EMOTE

Emotional Speech-Driven Animation with Content-Emotion Disentanglement

SIGGRAPH Asia 2023 (Conference)

EMOTE = Expressive Model Optimized for Talking with Emotion

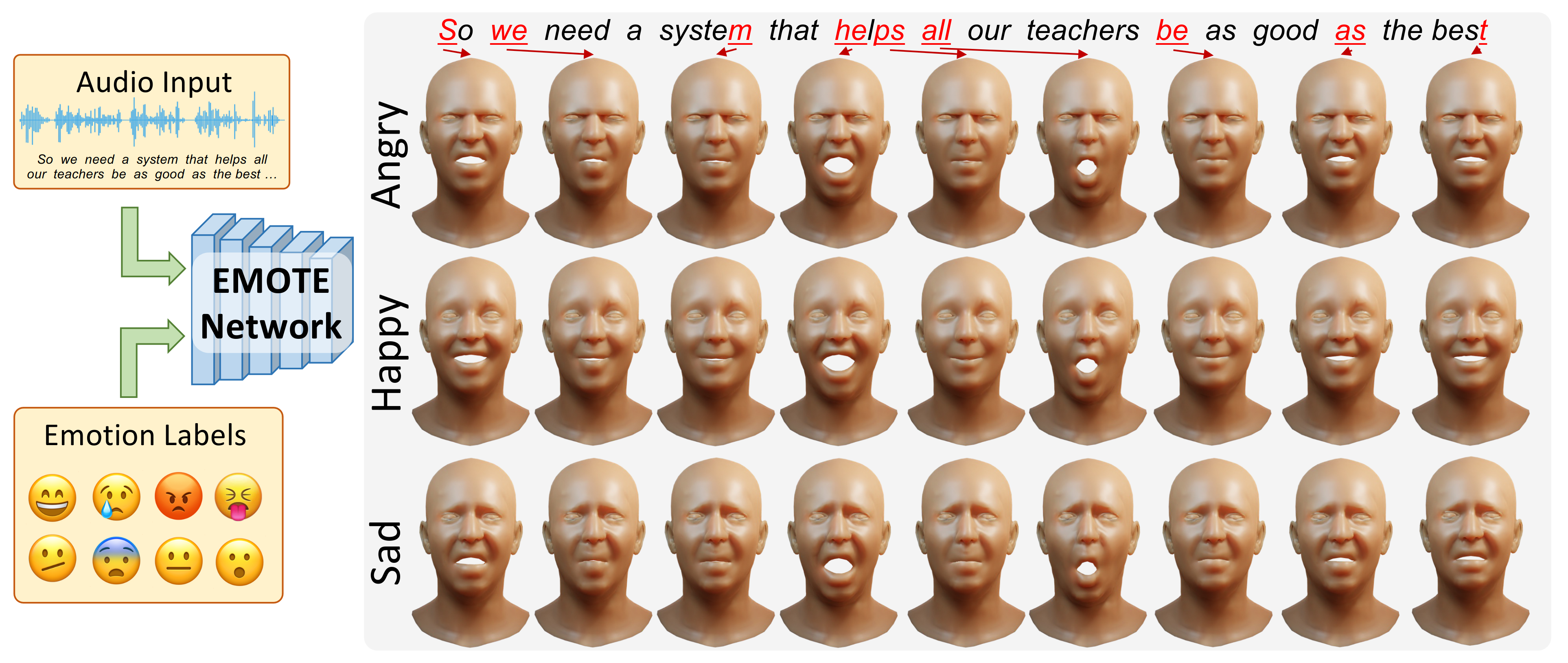

Given audio input and an emotion label, EMOTE generates an animated 3D head that has state-of-the-art lip synchronization while expressing the emotion. The method is trained from 2D video sequences using a novel video emotion loss and a mechanism to disentangle emotion from speech.

Code and model are available through the GitHub repo.

Training code and data coming soon.

@inproceedings{EMOTE,
  title = {Emotional Speech-Driven Animation with Content-Emotion Disentanglement},
  author = {Daněček, Radek and Chhatre, Kiran and Tripathi, Shashank and Wen, Yandong and Black, Michael and Bolkart, Timo},
  publisher = {ACM},
  month = dec,
  year = {2023},
  doi = {10.1145/3610548.3618183},
  url = {https://emote.is.tue.mpg.de/index.html},
  month_numeric = {12}
}